Isaac Asimov developed the Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.

Philosopher Jonny Thomson suggests one more:

4. A robot must identify itself.

This isn’t really a thing in most factories. The robots? They’re the ones with the gigantic mechanical arms. But there are cases in which robots might need to identify themselves as robots.

AI art should, Thomson thinks, be identified as such. Google is labeling AI-generated images, with the goal of reducing the spread of misinformation. That wasn’t really the Pope in that white puffer coat, and it wasn’t Elon Musk kissing a robot either. Google’s view is that you should be able to look up the background information for an image and see just what kind of manipulation it has undergone.

By the same token, AI-generated writing and music should also be identified. A healthcare robot should be identifiable as a robot, rather than giving the impression that it is being operated remotely by a human being. As more AI and robotic work is done in ways that can’t immediately be recognized for what it is, the ethical case for requiring robots to identify themselves grows stronger.

In real life

This question has come up with the use of robots for elder care. ElliQ, a robot companion that can alert elderly owners’ relatives to alarming situations, is designed to be friendly and helpful, but also distinctly a robot. Dor Skuler, co-founder and chief executive of Intuition Robotics, the maker of ElliQ, told the BBC, “I don’t see the link between trying to fool you and trying to give you what you need. ElliQ is cute, and it is a friend. From our research, an objectoid can still create positive affinity and alleviate loneliness without needing to pretend to be human.”

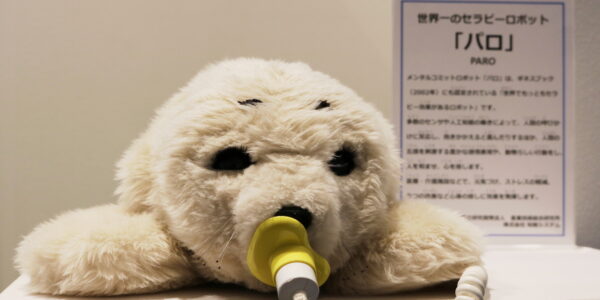

But research continues to show that we anthropomorphize robots every chance we get. Some researchers have raised concerns that dementia patients could be fooled by a robot. For example, a study of dementia patients’ responses to a robotic seal turned up cases of people who seemed to believe that the seal was alive or could have thoughts and feelings. A humanoid robot could elicit the same type of response.

In that study, however, the human handlers of the companion robot seals clearly stated that the seal was a robot. “It has sensors in its whiskers,” they would say. Being told this did not appear to stop the patients from feeling connected to the mechanical seals.

This should not surprise us if we consider the close connections people feel with their phones, their cars, and other devices. We know our phones are not alive, but many of us sleep with them close at hand and would go back home for them if we forgot them. Identification alone may simply not be enough to keep us from forming emotional connections with robots.

Still, if we adopt the proposed Fourth Law of Robotics, at least we’ll know what we’re getting ourselves into.