Both New York and New Jersey have new laws regulating the use of AI algorithms in hiring.

What are hiring algorithms?

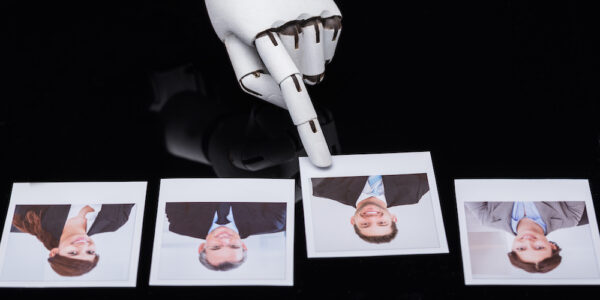

Hiring algorithms are computer programs used by employers to screen job applicants, evaluate their qualifications, and help decide whom to hire. While these algorithms have the potential to make hiring more efficient and objective, they can also perpetuate biases and discrimination if not designed and implemented properly. In recent years, concerns about the impact of hiring algorithms on fairness and equity have led to calls for regulation to govern their use.

Inhuman bias

One of the main issues with hiring algorithms is that they can be biased against certain groups of people. This can occur in a number of ways. For example, if the algorithm is trained on historical hiring data, it may learn to favor applicants who resemble past successful candidates, who may have been predominantly from certain demographic groups. Similarly, algorithms that rely on certain criteria, such as years of experience or educational background, may disadvantage candidates who come from non-traditional or underprivileged backgrounds.
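
To make the historical-data problem concrete, here is a deliberately naive, hypothetical scorer (not any real product's method). It rates candidates only by how common their college is among past hires. The data, names, and the `resemblance_score` function are invented for illustration. Two candidates with identical experience get very different scores purely because of where earlier hires happened to study.

```python
from collections import Counter

# Hypothetical record of past hires; most happened to attend one school.
PAST_HIRES = [
    {"college": "State Tech", "years": 6},
    {"college": "State Tech", "years": 8},
    {"college": "State Tech", "years": 5},
    {"college": "Ivy U", "years": 7},
]


def resemblance_score(candidate: dict, past_hires: list[dict]) -> float:
    """Share of past hires who attended the candidate's college.

    Nothing about ability is measured: the score only rewards resembling
    whoever was hired before. Years of experience are ignored entirely.
    """
    counts = Counter(hire["college"] for hire in past_hires)
    return counts[candidate["college"]] / len(past_hires)


# Two hypothetical candidates with identical experience.
alice = {"college": "State Tech", "years": 6}
bianca = {"college": "City Community College", "years": 6}

print(resemblance_score(alice, PAST_HIRES))   # 0.75
print(resemblance_score(bianca, PAST_HIRES))  # 0.0
```

If attendance at a given college correlates with race or family income, a scorer like this reproduces that pattern even though it never sees race or income directly.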

Another concern is that hiring algorithms can perpetuate stereotypes and reinforce systemic inequalities. For example, an algorithm that screens out candidates who use certain words or phrases may unfairly penalize individuals from certain cultural or linguistic backgrounds. Similarly, an algorithm that evaluates facial expressions or vocal tones during video interviews may discriminate against candidates with disabilities or differences in appearance or speech.

Regulations

To address these issues, some jurisdictions have begun to regulate the use of hiring algorithms. For example, the European Union's General Data Protection Regulation (GDPR) includes provisions that govern automated decision-making, including decisions made by hiring algorithms. The GDPR requires organizations to be transparent about their use of automated systems, gives individuals the right to obtain human review of significant decisions made solely by algorithms and to contest them, and calls for safeguards against discriminatory outcomes.

Similarly, in the United States, the Equal Employment Opportunity Commission (EEOC) has issued guidance on the use of hiring algorithms. The EEOC advises employers to ensure that their algorithms are job-related and consistent with business necessity, to evaluate algorithms for disparate impact on protected groups, and to periodically review and update algorithms to ensure that they remain valid and unbiased.
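
One common way to screen for disparate impact is the "four-fifths rule" from the federal Uniform Guidelines on Employee Selection Procedures: if a group's selection rate is less than 80% of the rate for the most-selected group, that may indicate adverse impact. The EEOC describes it as a rule of thumb rather than a definitive test. The sketch below applies it to made-up numbers; the group labels, counts, and the `four_fifths_check` function are hypothetical.

```python
def four_fifths_check(applicants: dict[str, int], selected: dict[str, int],
                      threshold: float = 0.8) -> dict[str, tuple[float, bool]]:
    """Compare each group's selection rate with the most-selected group's.

    Returns {group: (impact_ratio, flagged)}, where flagged is True when the
    ratio falls below the threshold (0.8 under the four-fifths rule of thumb).
    """
    # Selection rate: share of each group's applicants who were selected.
    rates = {g: selected[g] / applicants[g] for g in applicants}
    top = max(rates.values())
    if top == 0:
        raise ValueError("no group had any selections; ratios are undefined")
    return {g: (r / top, r / top < threshold) for g, r in rates.items()}


# Hypothetical screening outcomes for two applicant groups.
applicants = {"group_a": 100, "group_b": 50}
selected = {"group_a": 60, "group_b": 20}

for group, (ratio, flagged) in four_fifths_check(applicants, selected).items():
    print(f"{group}: impact ratio {ratio:.2f}, flagged={flagged}")
```

With these invented numbers, group_a is selected at 60% and group_b at 40%, so group_b's impact ratio is about 0.67 and it is flagged for closer review.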

Several states in the US have also enacted laws that bear on hiring algorithms. For example, Illinois' Artificial Intelligence Video Interview Act requires employers that use AI to analyze recorded video interviews to notify candidates, explain how the AI works and what characteristics it evaluates, and obtain their consent beforehand. California's Fair Employment and Housing Act does not target algorithms specifically, but its prohibition on discrimination based on protected characteristics, including race, gender, and disability, applies to hiring decisions however they are made.

The new laws in New York and New Jersey require bias audits before hiring algorithms are put into use, and they call for ongoing audits by the companies that create the algorithms. Cathy O'Neil, author of Weapons of Math Destruction, counts secrecy among the defining traits of harmful big-data tools. As long as people can't tell what characteristics are being used to judge them, she says, they can't defend themselves against the harm these systems can do, including disqualifying them for jobs. Requiring tests of the tools and explanations of their decisions could reduce that danger. Toolmakers, on the other hand, worry that such requirements could threaten their intellectual property.
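
The laws' exact audit formulas aren't given here, so the metric below is an assumption for illustration. For tools that output scores rather than yes/no decisions, one approach (New York City's audit rules describe a similar "scoring rate") is to take the share of each group scoring above the median of the whole applicant pool and divide it by the highest group's share. The `scoring_impact_ratios` function, group labels, and scores are hypothetical. Rerunning it on each new batch of applicants is one simple way to make such an analysis ongoing.

```python
from statistics import median


def scoring_impact_ratios(scores: list[tuple[str, float]]) -> dict[str, float]:
    """Impact ratios for a tool that outputs numeric scores.

    scores: (group, score) pairs for every applicant in the audit sample.
    A group's scoring rate is the share of its members scoring above the
    median of the whole sample; each rate is divided by the highest rate.
    """
    cutoff = median(s for _, s in scores)
    totals: dict[str, int] = {}
    above: dict[str, int] = {}
    for group, score in scores:
        totals[group] = totals.get(group, 0) + 1
        if score > cutoff:
            above[group] = above.get(group, 0) + 1
    rates = {g: above.get(g, 0) / n for g, n in totals.items()}
    best = max(rates.values())
    if best == 0:
        raise ValueError("no applicant scored above the median")
    return {g: r / best for g, r in rates.items()}


# Hypothetical audit sample: (group, score) for eight applicants.
sample = [
    ("group_a", 82), ("group_a", 75), ("group_a", 90), ("group_a", 60),
    ("group_b", 70), ("group_b", 55), ("group_b", 88), ("group_b", 65),
]
print(scoring_impact_ratios(sample))
```

Here the pool's median is 72.5; three of four group_a applicants score above it but only one of four group_b applicants does, giving group_b a ratio of about 0.33.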

Moving forward

Despite these regulations, concerns about the impact of automated hiring tools on fairness and equity persist. To address these issues, experts have called for greater transparency and accountability in the development and implementation of algorithms. This includes being transparent about the data used to train algorithms, allowing for external audits and reviews, and providing candidates with clear explanations for automated hiring decisions.
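
Explanations are easiest to produce when the scoring model is simple. Assuming a hypothetical linear screening score (the feature names and weights below are invented), each feature's contribution is its weight times the candidate's value, and sorting those contributions gives a readable list of reasons. Models that aren't linear need approximate attribution methods instead, so this is a best case rather than a general recipe.

```python
# Hypothetical weights for a linear screening score.
WEIGHTS = {"years_experience": 0.5, "skills_test": 0.25, "employment_gap_years": -0.75}


def explain(candidate: dict[str, float], weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (feature, contribution) pairs, largest absolute effect first.

    For a linear score, each contribution is weight * value, and the
    contributions sum exactly to the candidate's total score.
    """
    contributions = [(name, w * candidate[name]) for name, w in weights.items()]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


candidate = {"years_experience": 4, "skills_test": 9, "employment_gap_years": 2}
reasons = explain(candidate, WEIGHTS)
for name, contribution in reasons:
    print(f"{name}: {contribution:+.2f}")
print(f"total score: {sum(c for _, c in reasons):.2f}")
```

An explanation like this would surface the negative weight on employment gaps, a factor that could penalize caregivers or people with disabilities; that is the kind of finding candidates and auditors would want to see.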

Additionally, experts have emphasized the importance of diversity and inclusion in the development and use of AI hiring systems. This includes ensuring that the teams developing algorithms are diverse and inclusive, and that algorithms are designed to mitigate biases and promote equity.

Finally, some have called for a re-evaluation of the role of hiring algorithms altogether. While these algorithms can be useful tools in the hiring process, they are not a panacea for the complex challenges of workforce diversity and inclusion. Rather than relying solely on algorithms, these critics argue, employers should adopt a more holistic approach to hiring that includes a range of assessment methods, such as interviews, reference checks, and skills assessments.

Hiring algorithms have the potential to improve the efficiency and objectivity of the hiring process. However, they also have the potential to perpetuate biases and discrimination if not designed and implemented properly. To address these issues, governments and employers must take steps to ensure that these algorithms are transparent, fair, and unbiased, and that they promote diversity and inclusion.