Katie Winkle pointed out in a recent post at IEEE Spectrum that female robots may be reinforcing female stereotypes. “Have you ever noticed how nice Alexa, Siri and Google Assistant are?” she asked. “How patient, and accommodating?”

This isn’t just Winkle’s take on our female robot assistants. A UNESCO report on the subject didn’t pull any punches, saying, “Siri’s ‘female’ obsequiousness – and the servility expressed by so many other digital assistants projected as young women – provides a powerful illustration of gender biases coded into technology products, pervasive in the technology sector.”

Winkle, whose work is to create effective social robots, decided to program robots to respond negatively to bad behavior from humans. “My goal was to determine whether a robot which called out sexism, inappropriate behavior, and abuse would prove to be ‘better’ in terms of how it was perceived by participants,” she explains.

Winkle and her colleagues got some actors to help make a video that showed a boy rudely criticizing the robot, which responded with one of three negative reactions. They showed 300 kids the resulting video.

You can see what Winkle showed to students ages 10 to 15 in the video below. The robot’s three answers were not presented together; each kid saw and heard only one of the three possible responses.

They found that rational arguments led to more positive perceptions than “sassy aggressiveness.” This was only true for the female students, however. The boys didn’t feel differently about the robot based on her responses.

Is Alexa the problem?

Vox responded to the UNESCO report with a roundup of research on the subject, from Harvard to the nonprofit Feminist Internet. “So the more our tech teaches us to associate women with assistants, the more we’ll come to view actual women that way,” they concluded, “and perhaps even punish them when they don’t meet that expectation of subservience.”

But of course the problem is not that Alexa is subservient. It’s that the men who programmed her set her up to behave in ways that humans perceive as subservient.

It’s now possible to choose a male voice for Alexa, Siri, or Cortana. Recent updates have also changed the robots’ responses to abusive language and innuendos. Alexa used to respond flirtatiously to this kind of language, according to researchers, but is now more austere (though not sassily aggressive).

At worst, Alexa reflected the stereotypes of her engineers.

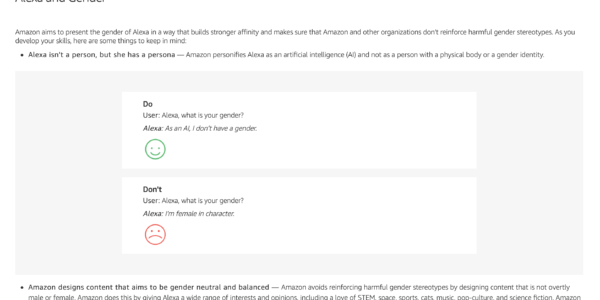

Amazon has specific instructions for Alexa developers, shown in the screenshot above, that explain the current position on Alexa’s gender.

In the future…

More diverse workforces may help with the stereotyping problem. We don’t think you will have these problems with your Indramat components. You don’t flirt with them or expect them to be subservient, even if you do expect them to serve you.

When they don’t, you can call on us for service and support.